Abstract

This science-fiction legal Essay is set in the year 2030. It anticipates the development and mass adoption of a device called the "Ruby" that records everything a person does. By imagining how law and society would adjust to such a device, the Essay uncovers two surprising insights about public policy: first, policy debates are slow to change when a new technology pushes out the "Pareto frontier" and dramatically changes the benefits that society can achieve at little or no cost. Second, in direct contradiction to most free speech theory and precedent, the thought experiment shows that First Amendment goals could be better served by affording the right to collect information more protection than it currently gets and giving the right to disseminate information less emphasis.

Introduction

Seventeen years ago, Google loosed a group of “explorers” to expose their new Glass device to the world and to expose the world to it.1 The introduction did not go well. Every detail, right down to the rugged term “explorer,” was ripe for mockery. It looked like this:

Glass did many things that challenged conventional uses of technology: it had the first augmented reality display intended for constant use, created the first comprehensive wearable network, and used advanced voice recognition commands.2 But the most reviled feature by far was its recording function. Glass users could video and audio record everything they did with their faces.

Commenters called Glass “[t]he [c]reepiest [t]ech of the [m]illenium [sic],”3 a precursor to the inevitable surveillance state,4 and an assault on forgetting.5 Users of the product were called “little brothers” and “Glassholes.”6

Well, we are all Glassholes now. The Ruby and other personal network devices have made ubiquitous sensors and recorders of us all. Save for a few holdouts, we are now sold on the utility of constant recording. The benefits to health and safety alone make our adoption of the Ruby irreversible, although the convenience and cat videos are nice, too. Meanwhile, after a period of doubt, we have been convinced that the harms of constant recording are managed well enough using technological design, social norms, and cautious lawmaking that, fortunately for us all, did not keep up with technology.7

Given our complete conversion, it is startling to think that the video recording functionality of personal network devices could have been regulated out of existence fifteen years ago if Google had pursued its plan to mass market Glass. The objection from policymakers was couched in legalese like notice and consent, but the resistance is better understood through examining the more human concepts of memory and accountability.

Many feared that ubiquitous recording would bring the end of forgiveness, nonconformity, and redemption. Digital storage of our experiences would interfere with the natural formation of the “complicated self.”8 Human memory, the theory goes, struck the right balance between remembering and forgetting so that we could hold each other accountable while also giving each other a second chance. There was a growing sense among scholars that we were at a precipice during the era of Google Glass. As Viktor Mayer-Schönberger wrote, “the shift from forgetting to remembering is monumental, and if left unaddressed, it may cause grave consequences for us individually and for society as a whole.”9 But writing, cameras, personal computers, email, social media, and smart phones would have looked like shifts from forgetting to remembering, too.10 This should have alerted critics of Glass that many technologies tinker on a continuum of augmented memory, and that forgiveness and redemption can adapt.

The reemergence and acceptance of ubiquitous recording technology can teach us something about information law and tech policy going forward. This Essay focuses on two insights revealed by Google Glass.

First, contrary to popular sentiment, privacy law should lag behind technology.11 The Glass experience helped clarify one source of our instinctual resistance to new technologies. Status quo and antitechnology biases have been well documented for decades, and the prevailing explanation is that humans fear the unknown.12 But the experience with Glass suggests a more precise explanation for the knee-jerk rejection of some technologies. It is best explained by what engineers refer to as the “Pareto frontier.”13 In brief, people become accustomed to the state of technology as it exists, and they take for granted the tradeoffs that must be made to optimize two or more conflicting goals. At any given time, we make choices based on the greatest benefits and lowest costs that technology can permit. But new technologies expand the Pareto frontier. Before a technology is introduced, public policy debates emerge around difficult tradeoffs: some benefits cannot be achieved without significant costs. These are debates that occur at the Pareto frontier because there is no Pareto-improving option: there is no way to get a benefit without incurring some sort of cost. But new technologies suddenly put more options on the table that were not available before. That is, they allow, for the first time, for certain benefits to be achieved without costs. They allow us to have cake and eat it, too.
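For readers who prefer a concrete illustration, the idea of a frontier being pushed out can be sketched in a few lines of Python. This is only a toy model under stated assumptions: each policy option is reduced to a single benefit score and a single cost score, the numbers are invented, and the names `dominates` and `pareto_frontier` are illustrative rather than drawn from any source.

```python
from typing import List, Tuple

# Each option is (benefit, cost); higher benefit and lower cost are better.
Option = Tuple[float, float]


def dominates(a: Option, b: Option) -> bool:
    """True if a is at least as good as b on both goals and strictly better on one."""
    return a[0] >= b[0] and a[1] <= b[1] and (a[0] > b[0] or a[1] < b[1])


def pareto_frontier(options: List[Option]) -> List[Option]:
    """Return the options that no other option dominates."""
    return [o for o in options if not any(dominates(p, o) for p in options)]


# Hypothetical numbers, for illustration only.
old_options = [(1.0, 1.0), (2.0, 3.0), (3.0, 6.0), (2.0, 5.0)]

# A new technology adds an option with high benefit at much lower cost.
new_option = (3.0, 2.0)

old_frontier = pareto_frontier(old_options)
new_frontier = pareto_frontier(old_options + [new_option])

print(old_frontier)  # every step up in benefit costs more
print(new_frontier)  # the new option displaces two of the old tradeoffs
```

On the old frontier, every increase in benefit requires accepting more cost, which is why the debate feels like a pure tradeoff. Once the new option is added, two of the old frontier points are dominated and drop out, so a debate framed around them is arguing over choices that no longer need to be made.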

The forecasts about the effects of Google Glass were tainted by the Pareto frontier fallacy. Scholars wrongly assumed that the benefits of enhanced data capture would be inseparable from the harms of enhanced data capture.14 They assumed that the sorts of recordings that help keep people accountable would also enslave them to conformity and mediocrity. They assumed that our choices were to remember everything or to stick with the memories we have. Today’s technology proved that this was a false choice—that we could harness some of the positive features of ubiquitous recording without having any meaningful negative consequences.15

That is not quite what happened, of course. Once the frontier was pushed out, we collectively settled on a new equilibrium that was responsible for some new problems for society. But in contrast to what was possible with old technologies, today’s devices allow us to harness most of the positive features of ubiquitous recording while hiving off most of the negative features. In the case of ubiquitous recording, this happens in a fairly obvious way: users can preserve what turns out to be useful and discard the rest.16

To be clear, I am not making an attitudinal distinction between techno-optimists and techno-pessimists. Optimists and pessimists fight over whether the glass is half-empty or half-full. The Pareto frontier bias causes people to continue bickering about whether the glass is half-empty or half-full when a new technology has poured in more water. Thus, when a new technology emerges that evidently solves some problem or satisfies some demand, regulators should give the technology some time to see whether costs that were once inextricably linked to benefits continue to be so.

The second lesson for information law surprised me: the experience with Glass has demonstrated that expanding First Amendment coverage to reach and protect a right to record is far more important to the health of information freedom than keeping up an exacting standard where it already applies. More specifically, for decades First Amendment case law strictly constrained the government from adopting restrictions on information dissemination while giving wide latitude to restrictions on information acquisition (such as recording audio and video).17 This rule had perverse effects on lawmaking. To avoid some disfavored information disclosures, the government could wipe out a much wider range of data collection without significant constitutional oversight.18 Thus, although I do not entirely agree with recent decisions upholding narrow public disclosure and cyberharassment laws against constitutional challenge,19 they are less bad than the statutes and administrative rules that made today’s recording technology illegal and very nearly strangled those innovations in the cradle. If these relatively recently enacted, narrow disclosure laws targeting some extreme forms of embarrassment and intimidation are the price to be paid for broad protection of recording and data acquisition, the constitutional bargain is a good one.

This evolution toward a First Amendment that is broad but not-too-thick was once thought to be the greatest sin in free speech theory. Many jurists and scholars favored constrained definitions of First Amendment “speech” or “content-based” regulations so that the protection of core political speech would not be watered down.20 But the government has a propensity to regulate outside the scope of recognized speech rights to indirectly achieve censorious goals. Laws that restricted recording were very popular at one time because many feared they would be harmed by some downstream data dissemination. If fears about certain types of information sharing are legitimate (and I will assume for a moment that they are), free speech values are far better served by regulations finely crafted to those concerns rather than bluntly targeting an entire recording technology.

This Essay describes our history with ubiquitous recording and its implications for the law of future communications technologies.

I. The History

A. A Failed Leap

Google Glass had a rough start, and not only because of its dorky design. The timing of the Glass rollout coincided with significant tension in the San Francisco Bay Area between the locals and the gentrifiers (or, perhaps more accurately, between the earlier and later gentrifiers). Some of the teasing was harmless and even funny (like the website “White Men Wearing Google Glass”).21 But there were also a few serious incidents of aggression against Google explorers, who were presumed to have undeserved privilege, by local residents of San Francisco’s bohemian Mission District.22 Google Glass became a symbol for the debates about income inequality, merit, labor, and economic security that continue to trouble us today.

Class strife aside, scholars and journalists were immediately suspicious of the filming functionality of Google Glass. Even explorers and tech journalists who felt warmly toward Glass saw recording as its key problem. A Wired journalist reported: “Again and again, I made people very uncomfortable. That made me very uncomfortable. People get angry at Glass. They get angry at you for wearing Glass.”23 A New Yorker article put it this way:

Glass bypasses the familiar, disarming physical ritual of photography: when a person raises a camera, or a smartphone, everyone knows what it means. Somehow an indicator light seems insufficient to overcome perceptions of Glass as furtive and dishonest. Some businesses have asked Google Glass users to remove their wearables or leave.24

Kevin Sintumuang at the Wall Street Journal, favorably disposed toward Glass, admonished users not to be creepy: “All it’s going to take is for one Glass wearer to record or photograph someone or something that shouldn’t have been filmed to ruin Glass for everyone. Let’s not incite lawmakers or angry mobs.”25

The gears of the regulatory process were already turning. Glass was used as evidence for a plea to Congress to pass a broad-sweeping data privacy law in the style of the European Union’s Data Protection Directive.26 A self-appointed “privacy caucus” of members of Congress sent Google a letter demanding answers to questions like: “Can a non-user . . . opt out of this collection of personal data? If so, how? If not, why not?”27 It was as good an example as any of regulation by raised eyebrow.

The most significant problem to the privacy community was the recording, particularly of nonusers. This was so even though Google Glass was somewhat limited as a video camera. It only recorded video and sound prospectively, when a user said, “Ok Glass, take a video.” It had none of the retrospective collection functions to which we have grown accustomed. But the seamlessness of the act of recording, and the fact that the recording occurred from the Glass user’s eyes, had a distracting effect on people in the presence of Glass. The Glass user could be recording and saving and sharing everything.

Legal scholars were prepared to explain and justify the public distrust. Indeed, legal scholars had been preparing for this moment since 1890 when Samuel Warren and Louis Brandeis published the most influential privacy article of all time in reaction to the press and the handheld camera.28 Their explanation was that, on balance, technologies that interfere with natural memory loss cause more harm than good to the “inviolate personality” of those recorded.29

The anticipated harm has been given many different titles over the years but has always been the same: the end of redemption. With greatly enhanced memory, we will all be condemned in the unforgiving judgment of others. Julie Cohen has written extensively and quite beautifully about the conforming effects of constant recording.30 Anita Allen warned about the problems of “dredging up the past.”31 Andrew Tutt called it the revisability principle—“the principle that an individual’s identity should always remain, to some significant extent, revisable.”32

The conforming effects of surveillance have been guiding lights for constitutional jurisprudence as well. An often-quoted dissent of Justice Harlan explained approvingly that “[m]uch off-hand exchange is easily forgotten and one may count on the obscurity of his remarks, protected by the very fact of a limited audience, and the likelihood that the listener will either overlook or forget what is said, as well as the listener’s inability to reformulate a conversation without having to contend with a documented record.”33

Whether the social and economic repercussions are based on an accurate appraisal of the person or based on a distortion34—based on some, but not the whole truth—the resulting policy prescription is the same: do not permit things to be recorded that have previously evaporated into safe obscurity.

The tension around video recording was defused when Google quietly withdrew Glass from the retail market and pursued other uses for the technology for four years. In response to the mounting resentment and regulatory attention to Glass, Google opted to focus on the receiving portion rather than the sensing and recording portion of Google Glass. Google poured its resources into improving the augmented reality and video game functions with limited recording.35 Some applications continued to use input data like Google Now, the software that provided location-aware notices,36 and the popular AllTheCooks app that guides users through recipes without taking their eyes off what they are doing.37 The most lucrative and cool by far were the surgery-related apps that guided doctors through precision procedures.38 But for several years, the development of persistent audio and video recording went underground. The public had no tolerance for the idea. Glass had failed.

But, to quote Henry Petroski, “form follows failure.”39

B. A Successful Crawl

It took ten years for the concept of potentially perpetual video recording to resurface. Many technologies helped it along. First, people began to embrace the “quantified self” for personal health and safety purposes. Fitbits and other simple wearable devices first tracked users’ footsteps, and then their heart rates and exertion in all activities. But health consumers wanted to monitor input as well as output—that is, their food consumption in addition to their exercise. Countertop diet robots like Autom became popular mechanical weight loss coaches.40 At first, the data entry was clunky. Autom would ask you, with increasing insistence if you became nonresponsive, about what you have eaten and how much exercise you have gotten. In the second generation, Autom was fitted with cameras and other sensors so that users could scan their food and input the information more passively.

The market had by this time offered other types of automated advisors and personal assistants, some that collected very sensitive data. A Medtronic wearable device developed in 2015, for example, had electrodes that were inserted under the user’s skin to monitor glucose and fluid levels so that it could predict hypoglycemic events a few hours before they occurred.41 Medtronic used IBM’s Watson software to advise users about what to do (for example, take a walk or eat some blueberries) to manage glucose in a hypercustomized way. But Autom2 was the first to showcase the utility of video input for purposes other than sharing videos.

There were two other technological developments relevant to the shift in public norms around recording. First, our automobiles were becoming more and more autonomous, which required more and more sensors, LIDAR, and yes, video cameras.42 The auto industry got ahead of the privacy concerns by educating the public about the data handling practices (such as the automatic deletion of data after a certain period of time unless the vehicle was in an accident). It compared the video capture from cars to the audio capture that the smart phones had been using for years: in order to recognize voice commands, a phone had to be listening to everything. The trick was to commit very little to permanent memory. The industry also succeeded in its campaign for receptive attitudes ahead of the rollout of its technology. It convinced the public well before automation was broadly available that the data collection was necessary for a car to act as a guardian angel over its driver and passengers.

The other X-factor was the body-worn camera, which had around this same time taken off as a means of accountability for police. In particular, the most popular model of camera (made by Taser) used the form of video recording to which we are now accustomed. Except when the camera was turned off, it constantly recorded. The data was automatically dumped unless the officer pressed the record button. The key advantage of this system is obvious to us now—it permitted retroactive recording. If an officer found himself in an unexpected or suddenly tense situation, pressing the record button would capture and save not only everything that happened after the button was pressed but also the thirty seconds that preceded it.

Thus, by 2025, consumers were less cautious about the concept of video recording. Indeed, consumers were hankering for video technology on their wearable devices so that they could capture their food, medicine, and other information without taking out their phones—in fact, without doing much of anything at all.

The first popular wearable assistant with an unobtrusive camera to hit the market was OrCam’s “MyMe.”43 The MyMe had two components: a camera that clipped to users’ shirts, and an ear piece that communicated with the user.

Like the Ruby, the MyMe’s video camera was always on as a default. But unlike the Ruby, it automatically dumped the video data. To avoid the privacy controversies that Glass faced, OrCam opted to ensure that its video camera was used only to help the user quantify information related to himself. So, it could not be used as a camera, not even if the user and everyone around him wanted it to. Instead, MyMe would use video images to log certain types of information (like food consumption). It also created “word cloud” memories that prepared a suggestive cluster of words about the people or events that the user encountered.44 MyMe fully embraced “privacy by design.”45

Thus, when the Ruby came on the market in 2026, the privacy community compared it unfavorably to the MyMe. The Ruby, as we all know, used a bracelet design like the Fitbit. It was fitted with seven tiny video cameras with fish-eye lenses to capture almost everything around the user (albeit in grainier quality than the cameras on smartphones).

Ruby embraced the concept of data expiry that was endorsed in the work of Viktor Mayer-Schönberger46 and was operationalized in body-worn cameras and Snapchat. As a default, Ruby recorded everything, sent the data to the cloud, and automatically dumped the data after a week. But the user could adjust the default in two ways: they could change how often the data was dumped and they could save individual recordings by marking them with a voice command in real-time. (The user could also find video recordings that were stored in the cloud and not yet expired, though in practice people rarely bother to do this.) Just like the Taser body-worn cameras, the marking command automatically saved and archived the recordings that occurred thirty seconds prior to the request to record. Ruby never provided video data to third parties without a court order and also did some screening of third-party app developers.47
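The retention scheme described above (record by default, expire after a set period, and let a marking command reach back thirty seconds) can be sketched in Python. This is a minimal toy model, not a description of how any real device works: the class `ExpiringRecorder`, its fields, and the use of plain timestamps in seconds are all assumptions made for illustration, and the demo uses a short retention window so the expiry is easy to see.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple

Frame = Tuple[float, str]  # (timestamp in seconds, frame label)


@dataclass
class ExpiringRecorder:
    """Toy model of default-expiry recording with retroactive marking.

    All names and defaults are hypothetical.
    """
    retention: float = 7 * 24 * 3600  # default: dump data after one week
    pre_roll: float = 30.0            # seconds saved before a mark
    saving: bool = False              # True while a marked recording is active
    buffer: Deque[Frame] = field(default_factory=deque)
    archive: List[Frame] = field(default_factory=list)

    def record(self, t: float, frame: str) -> None:
        """Store a frame, archive it if marking is active, then expire old data."""
        self.buffer.append((t, frame))
        if self.saving:
            self.archive.append((t, frame))
        # Drop anything older than the retention window.
        while self.buffer and self.buffer[0][0] < t - self.retention:
            self.buffer.popleft()

    def mark(self, now: float) -> None:
        """Archive the last `pre_roll` seconds and keep saving going forward."""
        cutoff = now - self.pre_roll
        last = self.archive[-1][0] if self.archive else float("-inf")
        self.archive.extend(
            f for f in self.buffer if f[0] >= cutoff and f[0] > last
        )
        self.saving = True

    def stop(self) -> None:
        """Return to the default of expiring everything."""
        self.saving = False


# Demo with a short retention window (100 seconds) instead of a week.
rec = ExpiringRecorder(retention=100.0, pre_roll=30.0)
for t in range(0, 200, 10):          # one frame every 10 seconds
    rec.record(float(t), f"frame@{t}")
rec.mark(now=190.0)                  # retroactively save the last 30 seconds
rec.record(200.0, "frame@200")       # captured while saving is on
rec.stop()

print([t for t, _ in rec.archive])
```

The design choice worth noticing is that expiry is the default path and preservation is the exception: unmarked frames fall out of the buffer on their own, while only a deliberate mark moves anything into the archive.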

Most consumers distrusted the ubiquitous recording function of Ruby when it was first introduced, but two things helped establish its dominance among wearable network devices. First, the cat videos. They became so much better. So did the dog and kid and other YouTube viral videos, and all for the same reason: although smartphones made video recording easy, they were still not recording during the vast majority of hilarious, touching, cute, and otherwise amazing moments.

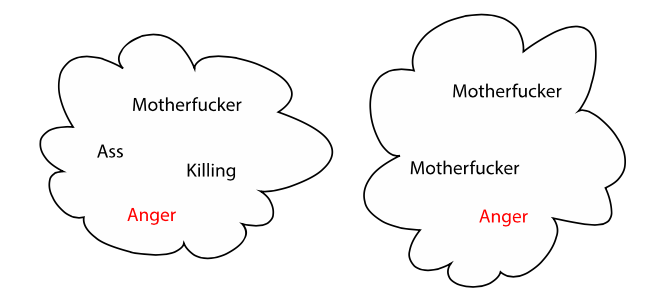

Then, Ruby was helped along by a serendipitous moment: a bar fight between two well-known hot-headed college athletes. The incident was captured by somebody wearing a Ruby. Despite the video, it was still difficult for the public to agree on which of the men in the video was more responsible for the escalation. One man said something that was inaudible; the second then yelled, “You motherfucker! Take it back or I’m going to kill your sorry ass.” The first yelled over him, saying, “You’re right I’m a motherfucker—the motherfucker that is going to put you in your motherfucking place.”

Had the Ruby video been the only thing recorded that night, it probably would have prompted another discussion about how even video technology can miss critical facts and leave too much to the eye of the beholder.48 But that sort of commentary was undercut because another person at the bar, a friend of the Ruby-user, was wearing a MyMe. The MyMe’s word cloud memory of the event looked like this:

The comparison between the two clinched the Ruby as the more useful personal tool—far from omniscient, but preferable nonetheless.

II. The Consequences

Ruby and other personal networks with video recording helped fill mortal memory gaps in ways that were both helpful and harmful. By now, it is difficult to argue with the observation that the benefits far outweigh the costs.

A. Abundant Benefits

The benefits of ubiquitous recording technologies include a wide range of health and time-saving innovations, from food and prescription live-logging to real-time price comparisons. One improvement that has received a lot of attention is the sharp reduction in accidental poisonings, which were once the most common accidental cause of death (ahead of traffic accidents).49 The Ruby’s cameras and processing are sensitive enough to flag overdoses of prescription and over-the-counter drugs.

Of course, some of these apps could run without saving all or most of the video data, so I will focus here on the uses of the Ruby that took advantage of the automatic short-term storage of video files and the relatively easy retroactive archiving of that video.

First, many of the most popular Ruby apps allow users to pool their video data (in deidentified form) for research and optimization, much like how the app Waze once used real-time geolocation data to optimize its directions and outperform competing services. These cooperative apps, as they have come to be known, have allowed Ruby to swiftly improve its software’s video recognition since users can report when the app has misidentified its footage. The cooperative apps have also helped specific app developers improve their algorithms for recommendations and guidance by learning from the data of other users.

The retroactive archiving function has been extremely valuable during medical emergencies since hospital residents could access video to observe the patient right before (sometimes days before) the medical emergency.

And then there is the benefit for news and evidence gathering. Criminal cases and political controversies have been enhanced by retroactive video archival for the very same reasons that cat videos have gotten so good: Passive recording ensures that unexpected things are captured. The effects on police accountability and use of force are particularly telling: with “little brother”50 turned on the police officers, uses of force have dropped in frequency so that they much better correspond to the actual threat that suspects pose to officers and to the public.

This phenomenon is easily taken for granted, but a slice in history can help illustrate the significance. In the summer of 2014, a white police officer in Ferguson, Missouri named Darren Wilson shot and killed a young unarmed black man named Michael Brown. The incident, and a grand jury’s decision not to indict Officer Wilson, set off national protests, conversations, and police reforms to address racial bias in policing. The eyewitnesses to the shooting had conflicting testimony about whether Michael Brown was charging toward Officer Wilson when he decided to fire ten shots.51 No video evidence was available. But some of the most damning evidence against Officer Wilson in the court of public opinion came from an audio recording that captured the sounds of six gunshots fired in quick succession, then a pause, followed by four more shots.52 A resident in a nearby apartment complex happened to be engaged in a video chat with his paramour at the time of the shooting. The sounds of the gunshots and the troubling pause between them were caught by dumb luck behind the voice of the apartment resident saying: “You are pretty. You’re so fine . . . .”53

To be sure, the video and audio recordings from Ruby are far from a perfect register of reality. If the measuring stick for evidence is absolute truth, nothing will ever satisfy the standard. But there are forms of evidence that are better and worse at reducing noise and doubt, and Ruby is one of the better ones.

Ruby also proved our romantic instincts about human memory wrong. The personal network device used passive data collection to automatically schedule events on our calendars, to store contact information for later use, and to recall our preferences about products and services. There is nothing poetic or honorable about forgetting these types of mundane details—mechanical memory is quite appropriate for them. In fact, it saves human memory for more important things. The Ruby saved us from tedious remembering, and, at the same time, conserved some forms of memory that we have sentimental and thoroughly human reasons to want to keep forever. The numerous touching accounts of Ruby’s role preserving the voice, mannerisms, and last good days of family members who passed away should challenge any simple argument in favor of forgetting.

Finally, there is the monumental reduction in violent crime that empirical research has credited to the Ruby. The Ruby’s retroactive video archiving is so powerful in deterring the use of violence and coercion that it took only a small proportion of early Ruby adopters to provide “herd immunity” to the people around them.54 The technology was much more effective than security cameras because it collected more information (including sound) and always stayed with the victim.55 For those with the 9-1-1 app, screams from the victim would trigger an automatic call to emergency services. Criminals had to take bigger risks to attempt their crimes. For example, muggers and armed robbers had to don ski caps much earlier in the process, announcing to onlookers that a crime would soon occur. It did not take long for social workers to recognize this trend and start devoting resources to the provision of Ruby devices to working mothers and their children who resided in crime-prone neighborhoods. This is, admittedly, a shade of conformity. And since conformity has received reflexive scorn from the intellectual community, the benefits of rapid decreases in violent crime have been minimized in the scholarly literature.

Many have criticized attempts (like this one) to compare benefits and costs, either because they are incommensurate or because the calculations are likely to go awry. One wanders into dangerous territory any time one compares the reductions in severe but relatively rare crimes on one hand to the countless privacy violations that can also reduce autonomy and quality of life on the other.56 But while the critiques are relevant in a static environment, they contain the fallacy of the Pareto frontier when applied to emerging technology. Our experience with Ruby has demonstrated that we need not take all of the bitter with the sweet. The typical Ruby user has harnessed accountability consistently where society is in general agreement that accountability is a good thing. Where society does not have any such consensus, the shaming and tattling potential of the Ruby has been used sparingly.

B. Limited Damage

If the predictions of public intellectuals had been correct, ubiquitous recording would have thrown us all into a state of distress and reputational poverty by now.57 But it has not worked out that way. The powers of social norms and semi-rational self-interest have kept us off that dire course for the most part.

Although some Ruby users have changed the default settings to archive all the recorded video (and have paid for premium storage service to do so), that choice is rarely made, and these users do not seem to be the ones who are causing problems. There are jerks—especially teenagers—who use their Rubies to shame others for embarrassing conduct. These problems are reminiscent of similar growing pains when YouTube was new. The “Star Wars Kid,” for example, gained unwelcome notoriety for his famously dorky reenactment of a light saber fight scene that unexpectedly wound up on the Internet.58 He was the involuntary star of one of the first viral videos. Likewise, a few unlucky people have become the involuntary stars of Ruby retroactive video archiving. These unlucky ones have been mercilessly mocked for perfectly ordinary clumsiness, perfectly natural flatulence, and perfectly common mistakes.59

The victims of viral video shaming were small in number, though that is hardly comforting to them. The vast majority of Ruby users complied with the social norms that preserve the dignity and self-determination of others. Most users who do post videos publicly take advantage of Ruby’s face-blurring software for nonconsenting subjects.60 And when video is used against the interests of its subjects, the Ruby recorder typically receives more disapprobation than the subject.

The larger problem by far was anxiety. We all felt it. We worried about the possibility of having one of our less flattering moments live on in the digital forever, threatening to expose us if not to the whole public, then to the people who matter most. Even I felt anxious under the weight of constant self-monitoring, and I had been relatively sanguine about recording technology.61 For a little while, it is only fair to admit, we engaged in the very self-censorship and conformity that my privacy law colleagues had predicted. We were subjects in a discomforting social experiment, and the Hawthorne Effect was strong.62 But it also wore off, as it tends to do.63

After a period had passed without significant disruption, people began to relax in the presence of other people’s Rubies. We slowly realized that we had not devolved into a society of paparazzi. I noticed that when I tripped, said something boneheaded, or did something else regrettable but forgivable, others had the courtesy and decency to do what they had always done. They pretended not to notice. And I held up my end of the social contract as well: I also made sure that their human frailties would not be permitted to haunt them. At the same time, Ruby users could also feel more confident that people who interacted with them were less likely to yell at them, to lie, and to tell risky or inappropriate jokes. Reasonable minds disagree about whether this type of reserve and conformity should count as harm or benefit, and I admit that I have no strong convictions, either.64

The learning curve with the Ruby turned out to be pretty consistent with the temporary growing pains of other new communications technologies. In the early years of social media (even before Facebook), some employers overreacted to the new treasure trove of information from social media. Stacy Snyder became a poster child for the harms of social media when she was denied a teaching certificate after posting a picture of herself in a costume holding a red plastic cup with the caption “drunken pirate.”65 A few other unlucky teachers were fired for similarly barely-offensive conduct. Viktor Mayer-Schönberger, Daniel Solove, and others held up these examples of disproportionate punishment as the typical results of an “overexposed” world.66 But these were not typical results. Employers learned to monitor and rely on social media less.67

In fact, what we knew about employee monitoring fifteen years ago probably could have helped us predict that Ruby would not be as devastating to our egos and creativity as we had feared. In the early 2000s, many employers who used video surveillance on their staff (including body-worn cameras for police) found that the recording caused inhibitions, caution, and overcorrection that got in the way of good work. Workers in some conditions fastidiously carried out protocols rather than using good judgment. But employers quickly learned that the negative effects of video recording could be avoided while still preserving the positive qualities if they used the recordings wisely. Trucking and delivery companies like UPS, for example, used sensors and video cameras to track every little move of their drivers, but the “coaches” who reviewed the data with the employees were prohibited from passing the information on to managers for demotion or firing decisions unless the driver was engaged in willful illegal conduct.68 The medical profession has had similarly good experiences with Grantcharov’s “black box” which records operations and helps surgeons improve their performance.69 Unless arrangements are made by the patient or hospital in advance, no data is preserved for litigation or human resources purposes. Couples and their marriage counselors are also using video recordings for self-improvement and self-awareness training purposes (though some therapists advise against it).

In short, like other forms of surreptitious recording, Ruby undeniably creates opportunities to make others and ourselves more miserable. But few of us choose to become punishers. Far from bringing the end of forgetting, Ruby actually ushered in an era of thoughtful forgetting.

C. The Law

Long ago, Danah Boyd correctly predicted that social norms solve the social problems from technology shocks better than law. “People, particularly younger people, are going to come up with coping mechanisms. That’s going to be the shift, not any intervention by a governmental or technological body.”70

Although comprehensive privacy legislation that would have given people a right to own and control information about them has been introduced in Congress three times since the popularization of the Ruby,71 none of these bills have made it out of committee. Instead, statutes and case law have solved specific problems in piecemeal fashion.

In the context of criminal investigations, the Stored Communications Act was amended to protect all data stored in the cloud regardless of its age so that police would always be required to get a court order before accessing privately held data. (The original statute made distinctions between data stored for more or less than six months.72) That amendment was made mostly moot by U.S. Supreme Court precedent clarifying the scope of the third-party doctrine and requiring a full probable-cause warrant in order for police to access cloud data controlled by suspects and stored by third-party service providers like Ruby.

To curb abuses by private actors, state harassment and privacy laws were strengthened to protect the subjects (mostly women) of revenge porn, up-skirt videography, and threatening communications. Recent state law tort claims based on intrusion and the public disclosure of private facts survived First Amendment challenges when the offending recordings focused on the most prurient and scatological aspects of human life.73 The mere potential for the Ruby to be used for humiliation persuaded several federal circuit courts that a line between minimally useful speech and purely wasteful videos could be drawn. “These are attributes that people view as deeply primordial, and their exposure often creates embarrassment and humiliation. Grief, suffering, trauma, injury, nudity, sex, urination, and defecation all involve primal aspects of our lives—ones that are physical, instinctual, and necessary.”74

But while the cases penalizing disclosure were succeeding, the cases that attempted to punish recording started losing. This is most obvious in the states that have so-called “all-party” wiretap laws. In these states, in contrast to the federal wiretap law, residents are not permitted to record a private conversation unless all parties involved have consented to the recording. These laws were already on shaky ground because of earlier First Amendment precedent recognizing an interest in video recording in public. To avoid friction either with the Constitution or with public expectations now that the Ruby has become so popular, state courts have been carefully reinterpreting their wiretap statutes so that no standard Ruby use is criminalized. So long as the Ruby records only what its user could see and hear, courts are clearly motivated to find that some part of the state prohibition will fail. For the intrusion tort, courts have easily worked within the elements to find that the recordings are not “highly offensive to a reasonable person.”75 Wiretap statutes required a little more interpretive ingenuity. The most common way around the language of the statute was to claim that conversations recorded by Rubies were not “private.”

Thus, the Ruby prompted a very interesting shift in information law. In previous decades, the basic legal scheme clamped down strongly on recording and data capture, but permitted (by constitutional necessity) anything that did happen to be captured to be disseminated freely. This compromise between privacy and speech interests is starting to find its way to a new equilibrium. Courts are relaxing both the privacy laws against recording and the free speech rights to disclose.

III. Lessons for Law

Lawmakers’ reactions to Google Glass show the dangers of regulatory anticipation. The Federal Trade Commission did a disservice to the very consumers it was trying to protect when it strongly urged Google to reverse its course with Glass.

I am not certain why Google acceded to public and regulatory pressure. One theory is that Google was by that point too large a company to push ahead in the face of significant public resistance, even though it should have known that the technology would eventually win over consumers. This story is consistent with market cycles—a newcomer like Ruby had the courage of a young company with nothing to lose. A more plausible theory, though, is that Google suffered from a winner’s curse. It was a large and largely feared company. If it had pushed ahead with Glass, it would have been sure to be punished by regulators.76 Its transgression would have triggered public support for an EU-style comprehensive privacy law, and the Ruby and similar technologies would have been illegal from inception. By this account, Google did the world a favor by clearing out of the ubiquitous recording market.

In any event, a fortunate set of events left us with much more modest information laws. We now have a handful of narrow amendments and variations on existing law rather than a comprehensive European-style privacy law that would have made the Ruby illegal at inception. This history has significance for future information regulations.

A. Pushing the Pareto Frontier

It is obvious now that fears of Google Glass were premised on a false assumption that we were operating on a Pareto frontier. If lawmakers permitted an increase in recordings that could help the Glass user or Google, then others would necessarily suffer. In fact, the Glass user was often portrayed as one of the unintended victims: if Glass permitted the user to keep track of all the little details that lead to good decisions, it also forced the user to keep track of all the little details that lead to bad decisions. Glass critics saw Google’s obvious interest in the enterprise as per se evidence that Google’s gain would be made possible through everybody else’s losses.77

Without innovations in data management, the critics could have been correct. If we had to store and sift through all of our video recordings to form opinions and make decisions, perhaps we would be better off recording very little. But technology can and does build in features to separate these costs and benefits very well, and social norms do most of the rest of the work.

The 9-1-1 app mentioned earlier provides a perfect example. When the app hears the sound of a particular type of human scream or the sound of a gunshot, it automatically alerts emergency services. In another era, this would not have been possible. Our choices would have been to use microphones to let the police and emergency services listen to everything or to not permit any listening at all. But the Ruby does not have to treat all information uniformly. It can automatically scan and filter its audio recordings to report only the ones that are most salient while protecting the public from the surveillance of innocent activities.78

In my view, the bigger regrets are the lost opportunities—the costs we have suffered from the restrictions on Ruby and from its slow adoption. For example, Fourth Amendment law now requires a warrant based on probable cause for police to access Ruby videos from Ruby users or their service providers. I have argued before that this requirement harms civil liberties more than it supports them. A better policy would permit police to access video footage from Ruby users who were near the scene of a crime at the time it occurred. This would foster what I have called “crime-out” investigations that use information from a crime to identify a suspect (as opposed to “suspect-in” investigations that use information about a suspect to link him to crime). But since this is not an option without meeting the high standards of probable cause, police units have instead increased the use of surveillance cameras (providing access to all sorts of irrelevant innocent activity) and have continued to rely on unreliable eyewitness accounts.

Similarly, long ago, scholars urged legislatures to consider using surveillance in lieu of pretrial detention in jails.79 The arguments could be extended to using surveillance as an alternative to incarceration even for those convicted of crime. The Ruby or some similar technology could have started to curb over-incarceration and promote rehabilitation long ago by trading prison time for 24-hour monitoring. But a coalition of privacy advocates and private prisons (strange bedfellows to be sure) persuaded lawmakers to abandon the idea.

Thinking at the Pareto frontier causes people to not recognize when technology has eased tensions between interests that used to compete. It causes people to overlook that costs and benefits have been separated. Given this human tendency, the law is better off taking a slower course that emerges in time rather than a technocratic approach that designs in advance. And more generally, regulators should hold lightly their predictions about the problems caused by advances in technology.80

Helen Nissenbaum recognized the risks of public hostility to new privacy-invading technologies in her pioneering work Privacy in Context.81 Nissenbaum therefore starts her analysis of new technologies with a rebuttable presumption in favor of entrenched practices “based on the belief that they are likely to reflect settled accommodation among diverse claims and interests.”82 Compared to most of the theoretical work on the law of new technology, her approach is refreshing because it allows for the possibility that entrenched practices do not serve even the people who we assume they do. I would simply design the presumption the other way. For the category of technologies that clearly add some value to society—that solve some problem in a cost-effective or novel way—the default from a regulatory perspective should be to allow the introduction of the technology and design proscriptions and prohibitions only when they are needed to restore balance or redistribute benefits. After all, norms will have a strong, reactionary influence on the pace of technological adoption as it is. The law need not pile on.

B. Free Speech Law

Free speech law makes a strong distinction between information gathering and information dissemination that is not as principled or sound as it once appeared.

For example, in 2001, the Supreme Court refused to apply a federal wiretap law criminalizing the disclosure of private, illegally intercepted conversations to a recording of a conversation involving matters of “public concern.”83 The Court was unmoved by arguments that the restrictions on disclosures affected only illegally acquired information because that conduct—the interceptions—could be deterred instead. But once a person or organization has acquired information, “state action to punish the publication of truthful information seldom can satisfy constitutional standards.”84 Why is it, though, that information acquisition could be so casually restricted? If sharing matters of public concern is so critical to the First Amendment purpose, an illegal interception of a phone call that happens to produce valuable public information should be just as carefully guarded as an illegal dissemination that happens to contain valuable information.

Scholars have adopted the Supreme Court’s framework unthinkingly.85 It explains why Jeff Rosen, whose book The Unwanted Gaze strongly criticized the increase in information collection, could also criticize the European right to be forgotten.86 Censoring the Internet follows the classic model for information-quashing. Yet how else are recording bans justified, except for their effects on thoughts, opinions, and communications?

The Glass experience has taught me that we have been approaching information regulation backwards. If distasteful videos and other disfavored speech are going to move the wheels of lawmakers, restrictions of pure speech will often be more narrowly tailored and less restrictive on the overall information environment than restrictions on information acquisition.

This observation gives me some trepidation. It can be misinterpreted as an invitation for governments to censor pornography, insults, and other sorts of offensive speech. I do not intend to promote any broad restrictions on information dissemination. As First Amendment protection begins to expand so that information collection and even information uses receive some judicial scrutiny, however, courts have given the speech rights enough give to accommodate practical, narrow regulations that honor timeless interests in the “right to be let alone.”87 (The ban on up-skirt photography and the continued validity of single-party wiretap laws are some examples.) If we have confidence that legislators can sort out the state interests and draft appropriate prohibitions on data collection, I see no reason to have overwhelming doubts about their powers with respect to dissemination laws, too. Most likely, both should be met with heightened, passable scrutiny.

Conclusions From 2016

Somebody—either Niels Bohr or Yogi Berra88—once said that prediction is tough, especially about the future. I hold no delusions about my own powers of prophecy. So, to cope with the low likelihood that even my vague predictions will be correct, I have written an account that is so absurdly specific it is certain to be wildly wrong.

This account certainly falls somewhere in the range of wish fulfillment fantasies for me. But I did abide by a few rules. First, I wanted to think through the legal implications of a Glass-style device that video recorded and saved everything even though I thought (and still think) that such a device will not be permitted to come onto the market. So to make it an instructive exercise, I held myself to the task of imagining a future that is possible, even if implausible. Thus, not all of the technical or legal details are consistent with my vision for the good.

Second, I forced myself to devote more concentration to the things that ran against my natural biases. Specifically, I committed to thinking through the uses of Ruby that would be obnoxious to me. In the end, I decided that my fears about the new technology had similar qualities to moral panics that accompanied other new forms of communications technology. Thus, this Essay dismisses the severity of the harms for the most part. But I must admit, the exercise made me very nervous—appropriately nervous, I should say, since all predictions of the future should come with enormous error bars. I also committed to thinking about the laws that would likely develop in the wake of a ubiquitous recording technology and ask myself “is this regulation really so terrible?” To my surprise, I found some of the laws that would most clearly conflict with existing First Amendment precedent to be far less harmful for the progress of human knowledge than the privacy laws that arguably steer clear of First Amendment precedent. This led me to the unorthodox free speech arguments I made in the last section. I am still unsettled by the arguments I make here, but perhaps by 2030 I will feel better about them.

Intellectual discomfort aside, this Essay should be seen as what it is: self-serving wishful thinking. My account of the Ruby serves me as a scholar by playing out some of the policy arguments I have made in previous work. It serves me as a consumer because I am an avid photographer and data researcher, and find value and satisfaction in activities that violate some of the stricter conceptions of privacy. And it serves me as an idiosyncratic human, too. While many of my peers have profound affection for forgetting, my emotional response is different. To me, forgetting is a tyrant. It takes away details and leaves shapeless impressions. It takes the things that are inconsistent with what I already think or want to believe. It steals more objectivity than it gives.

[1]. Frederic Lardinois, Google Glass Explorer Program Shuts Down, Team Now Reports to Tony Fadell, [small-caps]TechCrunch[end-small-caps] (Jan. 15, 2015), https://techcrunch.com/2015/01/15/google-glass-exits-x-labs-as-explorer-program-shuts-down-team-now-reports-to-tony-fadell/ [https://perma.cc/CR4U-CZZS].

[2]. This capability is also known as NLU or “natural language understanding.” Leonard Klie, Speech Is Set to Dominate the Wearables Market, [small-caps]Speech Tech.[end-small-caps] (May 1, 2014), http://www.speechtechmag.com/Articles/Editorial/Cover-Story/Speech-Is-Set-to-Dominate-the-Wearables-Market-96593.aspx [https://perma.cc/M2XE-QEQJ].

[3]. Ryan Tate, Google’s New Cyborg Glasses Are the Creepiest Tech of the Millenium, [small-caps]Gawker [end-small-caps](Apr. 4, 2012, 1:33 PM), http://gawker.com/5899109/googles-new-cyborg-glasses-are-the-creepiest-tech-of-the-millenium [https://perma.cc/3TXS-HVJZ].

[4]. Kashmir Hill, How Google Glasses Make a Persistent, Pervasive Surveillance State Inevitable, [small-caps]Forbes[end-small-caps] (Apr. 6, 2012, 11:16 AM), http://www.forbes.com/sites/kashmirhill/2012/04/06/how-google-glasses-make-a-persistent-pervasive-surveillance-state-inevitable/#6f419c024a85 [https://perma.cc/NHK3-5ZU7].

[5]. Teo Yu Siang, Forgetting to Forget: How Google Glass Complicates Social Interactions, [small-caps]Folio [end-small-caps](Jan. 21, 2015), https://foliojournal.wordpress.com/2015/01/21/forgetting-to-forget-how-google-glass-complicates-social-interactions-by-teo-yu-siang/ [https://perma.cc/QAX8-A2F9].

[6]. Raegan Macdonald, With Google Glass, Everyone Can Be Little Brother, [small-caps]Access Now[end-small-caps] (Apr. 3, 2013, 7:23 PM), https://www.accessnow.org/with-google-glass-everyone-is-big-brother/ [https://perma.cc/CDB6-UCKQ]; Matt Burns, Google Explains How Not to Be a Glasshole, [small-caps]TechCrunch[end-small-caps] (Feb. 18, 2014), https://techcrunch.com/2014/02/18/google-explains-how-not-to-be-a-glasshole/ [https://perma.cc/2UGD-KMNE].

[7]. Vivek Wadhwa, Laws and Ethics Can’t Keep Pace With Technology, [small-caps]MIT Tech. Rev. [end-small-caps](Apr. 15, 2014), https://www.technologyreview.com/s/526401/laws-and-ethics-cant-keep-pace-with-technology/ [https://perma.cc/8USU-DP2D].

[8]. [small-caps]Daniel J. Solove, The Future of Reputation: Gossip, Rumor, and Privacy on the Internet [end-small-caps]68, 72–73 (2007).

[9]. [small-caps]Viktor Mayer-Schönberger, Delete: The Virtue of Forgetting in the Digital Age [end-small-caps]14 (2009).

[10]. Mayer-Schönberger acknowledged these, but claimed that none had actually toggled the default from forgetting to remembering. Id. at 33–49 (“Yet, fundamentally remembering remained expensive.”). Yet, later in the book, the fundamental problems he associates with digital memory are changes to the relative power of actors and distortion—that it does not actually provide a complete picture. Id. at 97–127 (describing the problems in terms of “power” and “time”). Thus, it is not clear why today’s technology makes a fundamentally different shift in power or distortion from the shifts that came before from other communications technologies.

[11]. See Lyria Bennett Moses, Recurring Dilemmas: The Law’s Race to Keep Up With Technological Change, 2007[small-caps] U. Ill. J.L. Tech. & Pol’y[end-small-caps] 239 (2007); Charles Ornstein, Privacy Not Included: Federal Law Lags Behind New Tech, [small-caps]ProPublica[end-small-caps] (Nov. 17, 2015, 11:00 AM), https://www.propublica.org/article/privacy-not-included-federal-law-lags-behind-new-tech [https://perma.cc/V6TQ-L6UR].

[12]. [small-caps]Bryan Caplan, The Myth of the Rational Voter [end-small-caps]65–66 (2007) (technology); Daniel Kahneman et al., Anomalies: The Endowment Effect, Loss Aversion, and Status Quo Bias, 5 [small-caps]J. Econ. Perspectives[end-small-caps] 193, 197–99 (1991).

[13]. See Nate Silver, Marco Rubio and the Pareto Frontier, [small-caps]FiveThirtyEight [end-small-caps](Feb. 19, 2015, 6:04 AM), http://fivethirtyeight.com/features/marco-rubio-and-the-pareto-frontier/ [https://perma.cc/9759-H58J].

[14]. “Until recently, the fact that remembering has always been at least a little bit harder than forgetting helped us humans avoid the fundamental question of whether we would like to remember everything forever if we could.” [small-caps]Mayer-Schönberger[end-small-caps], supra note 9, at 49 (emphasis added).

[15]. This is what the MyMe aimed to do. See infra Part I.B.

[16]. Part II addresses concerns that users will make themselves or others miserable. See infra Part II.

[17]. Bartnicki v. Vopper, 532 U.S. 514, 529–30 (2001) (treating the prohibition on intercepting and recording a private phone call as a regulation of conduct and the prohibition on disseminating those recordings as a regulation of speech).

[18]. See generally Seth Kreimer, Pervasive Image Capture and the First Amendment: Memory, Discourse, and the Right to Record, 159 [small-caps]U. Pa. L. Rev.[end-small-caps] 335 (2011); Jane Bambauer, Is Data Speech?, 66 [small-caps]Stan. L. Rev. [end-small-caps]57 (2013); Alan Chen & Justin Marceau, Free Speech and Democracy in the Video Age, 69 [small-caps]Vand. L. Rev. [end-small-caps]1435 (2015).

[19]. United States v. Sayer, 748 F.3d 425 (1st Cir. 2014).

[20]. See, e.g., R.A.V. v. St. Paul, 505 U.S. 377, 415 (1992) (Blackmun, J., concurring); Vincent Blasi, The Pathological Perspective and the First Amendment, 85 [small-caps]Colum. L. Rev. [end-small-caps]449, 452 (1985); Philip Hamburger, More Is Less, 90 [small-caps]Va. L. Rev. [end-small-caps]835 (2004); Kenneth L. Karst, The Freedom of Intimate Association, 89 [small-caps]Yale L.J. [end-small-caps]624, 654 (1980); Kyle Langvardt, The Doctrinal Toll of “Information as Speech”, 47 [small-caps]Loy. U. Chi. L.J.[end-small-caps] 761, 763–64 (2016). But see John D. Inazu, More Is More: Strengthening Free Exercise, Speech, and Association, 99 [small-caps]Minn. L. Rev. [end-small-caps]485 (2014).

[21]. White Men Wearing Google Glass, [small-caps]Tumblr[end-small-caps], http://whitemenwearinggoogleglass.tumblr.com/ [https://perma.cc/J5TQ-WY77].

[22]. Kyle Russell, I Was Assaulted for Wearing Google Glass in the Wrong Part of San Francisco, [small-caps]Bus. Insider[end-small-caps] (Apr. 13, 2014, 12:22 AM), http://www.businessinsider.com/i-was-assaulted-for-wearing-google-glass-2014-4 [https://perma.cc/59RZ-NX3E]; Robin Wilkey, Woman Attacked for Wearing Google Glass at San Francisco Dive Bar, [small-caps]Huffington Post [end-small-caps](Feb. 25, 2014, 1:36 PM), http://www.huffingtonpost.com/2014/02/25/woman-attacked-google-glass_n_4854442.html [https://perma.cc/BUD5-UU8Z].

[23]. Mat Honan, I, Glasshole: My Year With Google Glass, [small-caps]Wired [end-small-caps](Dec. 30, 2013, 6:30 AM), http://www.wired.com/2013/12/glasshole/ [https://perma.cc/MBK8-JJTV].

[24]. Anisse Gross, What’s the Problem With Google Glass?, [small-caps]New Yorker [end-small-caps](Mar. 4, 2014), http://www.newyorker.com/business/currency/whats-the-problem-with-google-glass [https://perma.cc/L67F-YH9U].

[25]. Kevin Sintumuang, Google Glass: An Etiquette Guide, [small-caps]Wall Street J. [end-small-caps](May 3, 2013), http://www.wsj.com/articles/SB10001424127887323982704578453031054200120 [https://perma.cc/RLB4-T9WU].

[26]. Directive 95/46/EC, of the European Parliament and of the Council of 24 October 1995 on the Protection of Individuals With Regard to the Processing of Personal Data and on the Free Movement of Such Data, 1995 O.J. (L 281).

[27]. Letter from Rep. Joe Barton et al., U.S. Congress, to Larry Page, Chief Exec. Officer, Google (May 16, 2013), https://joebarton.house.gov/images/user_images/gt/GoogleGlassLtr_051613.pdf [https://perma.cc/8C3C-V6Z9].

[28]. Samuel Warren & Louis Brandeis, The Right to Privacy, 4 [small-caps]Harv. L. Rev. [end-small-caps]193 (1890); see also Neil M. Richards, The Puzzle of Brandeis, Privacy, and Speech, 63 [small-caps]Vand. L. Rev. [end-small-caps]1295 (2010) (recounting the history and the sociological context of the article).

[29]. Warren & Brandeis, supra note 28, at 205.

[30]. Julie E. Cohen, Examined Lives: Informational Privacy and the Subject as Object, 52 [small-caps]Stan. L. Rev. [end-small-caps]1373, 1426 (2000).

[31]. Anita L. Allen, Dredging up the Past: Lifelogging, Memory, and Surveillance, 75 [small-caps]U. Chi. L. Rev.[end-small-caps] 47, 61 (2008) (“The limitations of memory combined with practical barriers to efficient dredging once made it rational to predict that much of the past could be kept secret from people who matter.”).

[32]. Andrew Tutt, The Revisability Principle, 66 [small-caps]Hastings L.J. [end-small-caps]1113, 1116 (2015).

[33]. United States v. White, 401 U.S. 745, 787–88 (1971) (Harlan, J., dissenting); see also Talley v. California, 362 U.S. 60, 65 (1960) (“[F]ear of reprisal might deter perfectly peaceful discussions of public matters of importance.”).

[34]. Daniel J. Solove, A Taxonomy of Privacy, 154 [small-caps]U. Pa. L. Rev. [end-small-caps]477, 516 (2006) (describing harms from distortion).

[35]. See Dante D’Orazio, Unreleased Google Glass Enterprise Edition Headset Revealed in eBay Listing, [small-caps]Verge[end-small-caps] (Mar. 19, 2016, 4:23 PM), http://www.theverge.com/2016/3/19/11269910/google-glass-enterprise-edition-pictures-ebay-listing [https://perma.cc/RR87-L7LA].

[36]. See Honan, supra note 23 (describing Google Now).

[37]. Id.

[38]. See Nicholas P. Terry et al., Google Glass and Health Care: Initial Legal and Ethical Questions, 8 [small-caps]J. Health & Life Sci. L. [end-small-caps]93 (2015).

[39]. [small-caps]Henry Petroski, The Evolution of Useful Things [end-small-caps]22 (1992).

[40]. See John Biggs, Diet Robot, Autom, Now Available on Indiegogo, Will Ship in Six Months, [small-caps]TechCrunch [end-small-caps](Nov. 23, 2012), https://techcrunch.com/2012/11/23/diet-robot-autom-now-available-on-indiegogo-will-ship-in-six-months/ [https://perma.cc/XUL7-3KF6].

[41]. See Ariana Eunjung Cha, Medtronic, IBM Team up on Diabetes App to Predict Possibly Dangerous Events Hours Earlier, [small-caps]Wash. Post[end-small-caps] (Jan. 7, 2016), https://www.washingtonpost.com/news/to-your-health/wp/2016/01/07/medtronic-ibm-team-up-on-diabetes-app-to-predict-possibly-dangerous-events-hours-earlier/ [https://perma.cc/4K3U-Z237]. By the time of Glass, even smartphones supported many medical apps that could measure glucose levels and even take ECGs without a doctor, which helped start a slow but steady improvement in health outcomes and a decrease in medical costs. See [small-caps]Eric Topol, The Patient Will See You Now: The Future of Medicine Is in Your Hands[end-small-caps] 6, 174–78 (2015).

[42]. See generally Harry Surden & Mary-Anne Williams, How Self-Driving Cars Work (May 25, 2016) (unpublished manuscript), https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2784465.

[43]. John Markoff, It’s an Unobtrusive Assistant Whispering in Your Ear (Not Little Brother), [small-caps]N.Y. Times[end-small-caps] (Dec. 31, 2015, 5:26 PM), http://bits.blogs.nytimes.com/2015/12/31/its-an-unobtrusive-assistant-whispering-in-your-ear-not-little-brother/?_r=0 [https://perma.cc/VCJ8-S3P6].

[44]. Id.

[45]. Kashmir Hill, Why ‘Privacy By Design’ Is the New Corporate Hotness, [small-caps]Forbes [end-small-caps](July 28, 2011, 1:23 PM), http://www.forbes.com/sites/kashmirhill/2011/07/28/why-privacy-by-design-is-the-new-corporate-hotness/#3c253d0277de.

[46]. [small-caps]Mayer-Schönberger[end-small-caps], supra note 9, at 171.

[47]. Ruby permitted third-party app developers to collect video data only if it was used in conjunction with Ruby’s face-blurring software.

[48]. Timothy Williams et al., Police Body Cameras: What Do You See?, [small-caps]N.Y. Times [end-small-caps](Apr. 1, 2016), http://www.nytimes.com/interactive/2016/04/01/us/police-bodycam-video.html?_r=0.

[49]. Accidents or Unintentional Injuries, [small-caps]Ctrs. for Disease Control & Prevention[end-small-caps], http://www.cdc.gov/nchs/fastats/accidental-injury.htm [https://perma.cc/6G4R-W55E].

[50]. See Maria Bustillos, Little Brother Is Watching You, [small-caps]New Yorker[end-small-caps] (May 22, 2013), http://www.newyorker.com/tech/elements/little-brother-is-watching-you [https://perma.cc/V34A-E8ZR].

[51]. Larry Buchanan et al., What Happened in Ferguson?, [small-caps]N.Y. Times[end-small-caps] (Aug. 10, 2015), http://www.nytimes.com/interactive/2014/08/13/us/ferguson-missouri-town-under-siege-after-police-shooting.html.

[52]. Holly Yan, Attorney: New Audio Reveals Pause in Gunfire When Michael Brown Was Shot, CNN (Aug. 27, 2014, 12:42 PM), http://www.cnn.com/2014/08/26/us/michael-brown-ferguson-shooting/ [https://perma.cc/4QKS-MWRC].

[53]. Id.

[54]. Community Immunity (“Herd Immunity”), [small-caps]Vaccines[end-small-caps], http://www.vaccines.gov/basics/protection/ [https://perma.cc/8T3W-42VQ].

[55]. Stationary cameras merely “displace[d]” crime from areas near cameras to areas without cameras. [small-caps]Jennifer King et al., CITRIS Report: The San Francisco Community Safety Camera Program[end-small-caps] 11 (2008), https://www.wired.com/images_blogs/threatlevel/files/sfsurveillancestudy.pdf [https://perma.cc/EDS3-TBVS]. However, some studies found that even the stationary cameras reduced crime. [small-caps]Marcus Nieto, Cal. Research Bureau, Public Video Surveillance: Is it an Effective Crime Prevention Tool?[end-small-caps] (1997).

[56]. For a thorough and nuanced account of the limits of cost-benefit analysis in the counter-terrorism context, see Katherine Strandburg, Cost-Benefit Analysis, Precautionary Principles, and Counterterrorism Surveillance, [small-caps]U. Chi. L. Rev.[end-small-caps] (forthcoming 2016) (manuscript at 21–22).

[57]. See [small-caps]Dave Eggers, The Circle[end-small-caps] (2013) (describing the chaos and personal stress caused by a device called the “SeeChange” that constantly records and publicly discloses the wearer’s experience).

[58]. See Rebecca Hawkes, Whatever Happened to Star Wars Kid? The Sad but Inspiring Story Behind One of the First Victims of Cyberbullying, [small-caps]Telegraph[end-small-caps] (May 4, 2016, 5:52 PM), http://www.telegraph.co.uk/films/2016/05/04/whatever-happened-to-star-wars-kid-the-true-story-behind-one-of/ [https://perma.cc/N7HS-MHXG].

[59]. Incidence of revenge pornography—the practice of sharing photographs and videos of intimacy without the subject’s consent—also increased for a short time, although the problem predated the Ruby and was curbed to some extent by federal criminal legislation. Steven Nelson, Congress Set to Examine Revenge Porn, [small-caps]U.S. News[end-small-caps] (July 30, 2015, 11:32 AM), http://www.usnews.com/news/articles/2015/07/30/congress-set-to-examine-revenge-porn [https://perma.cc/E9HX-DAJG].

[60]. Stephanie Kanowitz, Police Video Redaction: Where Big Data Meets Privacy, GCN (Mar. 18, 2016) (describing face-blurring), https://gcn.com/articles/2016/03/18/video-redaction-tech.aspx [https://perma.cc/N22E-48KT].

[61]. Jane Bambauer, Glass Half Empty, 64 [small-caps]UCLA L. Rev. Disc.[end-small-caps] (2016).

[62]. The Hawthorne Effect is a phenomenon in industrial research in which observed subjects increase their work productivity because of the increased attention that is paid to them. See generally Steven D. Levitt & John A. List, Was There Really a Hawthorne Effect at the Hawthorne Plant? An Analysis of the Original Illumination Experiments, 3 [small-caps]Am. Econ. J.: Applied Econ.[end-small-caps] 224 (2011).

[63]. Id. at 231.

[64]. In a study of video monitoring at over 400 restaurants, the intervention not only reduced theft but also improved service and increased the tips that the employees received. Other studies from around the same time found that transparency had more of a positive effect than a punitive one: supervisors and customers alike valued the work of employees more when they saw what they were doing. Ethan Bernstein, How Being Filmed Changes Employee Behavior, [small-caps]Harv. Bus. Rev.[end-small-caps] (Sept. 12, 2014), https://hbr.org/2014/09/how-being-filmed-changes-employee-behavior [https://perma.cc/EDC3-22E9].

[65]. [small-caps]Mayer-Schönberger[end-small-caps], supra note 9, at 1–2.

[66]. [small-caps]Solove[end-small-caps], supra note 8, at 95–96.

[67]. In fact, even the Stacy Snyder case seems to have been much more complicated than the popular story suggests—Snyder’s performance reviews had already been tarnished with complaints of her lack of professionalism and poor knowledge of the subject matter she was teaching. Julian Sanchez, Court Rejects Appeal Over Student-Teacher Drunk MySpace Pics, [small-caps]ArsTechnica[end-small-caps] (Dec. 5, 2008, 8:01 AM), http://arstechnica.com/tech-policy/2008/12/court-rejects-appeal-over-student-teacher-drunk-myspace-pics/ [https://perma.cc/U7YR-AVP2].

[68]. See Bernstein, supra note 64.

[69]. See Tom Jackman, Could Cameras in Operating Rooms Reduce Preventable Medical Deaths?, [small-caps]Wash. Post[end-small-caps] (Aug. 25, 2015), https://www.washingtonpost.com/local/could-cameras-in-operating-rooms-reduce-preventable-medical-deaths/2015/08/25/fc2696c4-3ae2-11e5-b3ac-8a79bc44e5e2_story.html [https://perma.cc/SVM7-6DNB].

[70]. Jessica Winter, The Advantages of Amnesia, [small-caps]Boston Globe[end-small-caps] (Sept. 23, 2007), http://archive.boston.com/news/globe/ideas/articles/2007/09/23/the_advantages_of_amnesia [https://perma.cc/92JZ-KZ63].

[71]. These bills closely resembled European privacy law that continues to restrict the use of Ruby devices on that continent. Directive 95/46/EC of the European Parliament and of the Council of 24 October 1995 on the Protection of Individuals With Regard to the Processing of Personal Data and on the Free Movement of Such Data, [small-caps]Official J. Eur. Communities[end-small-caps] No. L 281/31 (1995), http://ec.europa.eu/justice/policies/privacy/docs/95-46-ce/dir1995-46_part1_en.pdf.

[72]. 18 U.S.C. § 2703(a), (b) (requiring a warrant to access the contents of communications held in electronic storage for less than six months, but requiring only a subpoena or special order for contents held more than six months or held for storage purposes rather than for communications routing purposes).

[73]. See, e.g., Daily Times Democrat v. Graham, 276 Ala. 380 (1964).

[74]. Solove, supra note 34, at 536.

[75]. [small-caps]Restatement (Second) of Torts[end-small-caps] § 652D (Am. Law Inst. 1977).

[76]. Indeed, the company was already subject to a 20-year consent order due to previous privacy actions.

[77]. See, e.g., [small-caps]Mayer-Schönberger[end-small-caps], supra note 9, at 138 (“Comprehensive information privacy legislation is the exact opposite: most citizens perceive to gain a little (but too little to be motivated to act), while the motivated and well-funded group of information processors fear they will lose a lot.”); Charles Arthur, Google Glass: Is It a Threat to Our Privacy?, [small-caps]Guardian[end-small-caps] (Mar. 6, 2013, 10:08 EST), https://www.theguardian.com/technology/2013/mar/06/google-glass-threat-to-our-privacy [https://perma.cc/JT9R-V42Y] (“Of course, the benefits wouldn’t accrue to the wearer.”).

[78]. See Jane Bambauer, Other People’s Papers, 94 [small-caps]Tex. L. Rev.[end-small-caps] 205, 210–11 (2015) (describing importance of filterability).

[79]. See Samuel Wiseman, Pretrial Detention and the Right to Be Monitored, 123 [small-caps]Yale L.J.[end-small-caps] 1344 (2014).

[80]. For another account of the pessimism in health care innovations, see [small-caps]Topol[end-small-caps], supra note 41.

[81]. [small-caps]Helen Nissenbaum, Privacy in Context: Technology, Policy, and the Integrity of Social Life[end-small-caps] 15 (2010) (“To avoid the charge of stodginess and conservatism, it needs to incorporate ways not only to detect whether practices run afoul of entrenched norms but to allow that divergent practices may at times be ‘better’ than those prescribed by existing norms.”).

[82]. Id.

[83]. Bartnicki v. Vopper, 532 U.S. 514, 525 (2001).

[84]. Id. at 527 (quoting Smith v. Daily Mail Publ’g Co., 443 U.S. 97, 102 (1979)).

[85]. Even I had made this mistake once upon a time. Jane Bambauer, The New Intrusion, 88 [small-caps]Notre Dame L. Rev.[end-small-caps] 205, 206–07 (2012).

[86]. Jeffrey Rosen, The Right to Be Forgotten, 64 [small-caps]Stan. L. Rev. Online[end-small-caps] 88 (2012).

[87]. Warren & Brandeis, supra note 28, at 193.

[88]. A Google search gives credit to both.